Responsible Data, Responsive AI: What Agencies Need to Know

Innovation is at the heart of what digital agencies do, so it’s no surprise that executives and developers alike are getting swept up in the promises of AI. But, as with all new and (in the minds of some) mysterious technologies, we should remember to apply the brakes sometimes - at least for long enough to ask whether we actually know what we are doing.

To put it a different way, you wouldn’t get behind the wheel of a car unless you knew how to drive - even a self-driving, supposedly autonomous car. And that’s because there still needs to be a human in the driver’s seat, monitoring the outcomes and applying judgment.

As yet, no company has succeeded in creating a completely autonomous car - and this is a helpful analogy to apply to AI augmentation in general. Humans are still ultimately responsible for the outcomes that result from using AI. It is only a tool after all. We have to make sure that we’re using it well.

If we make room for a little learning and best practice, we can expect to get more out of AI in the long run. We may have to adapt aspects of how we work and allow our mindsets to evolve as we adopt AI. But the benefits will far outweigh the initial effort.

- How are Agencies Already Using AI?

- Project Management and AI

- What Does Good Project Data Look Like?

- Responsible Data = Responsive AI

How are Agencies Already Using AI?

In the agency space, many of us are likely to have come across AI in tools for specific tasks, like photo editing and copywriting. At present, it is still generally agreed that you’ll need a human to run their critical eye over the AI’s creation before you hit “publish” or send anything to a client. But these apps are becoming increasingly sophisticated. AI-augmented photo editors are able to power through routine retouches far faster than a person, while still delivering the same visual quality. AI copywriting apps are sounding more and more natural as time goes on.

But there is a clear reason why it has been possible to create these refined applications. Take a moment to consider the sheer amount of content online - images, text, videos. This is all potential data that could be used to train AI. One example of this in action is Google’s BERT natural language model, which was trained on data that includes the entirety of English-language Wikipedia - an enormous 2,500 million words.

That is a vast amount of data, so it’s no wonder that AI models can produce highly accurate results on tasks like image classification or natural language processing.

But what if your aspirations for AI are a bit more context-specific? Your copywriters, photographers and designers may already be getting some assistance from AI. Can your project managers or operations leaders expect the same at some point down the line?

Project Management and AI

The fact that AI needs to be trained on datasets is, in some respects, one of its great limitations. You might have a brilliant, innovative application for AI in mind, but if the dataset you need does not exist, you need to first gather the data before you can get your idea off the ground. In the context of project operations, this means that, if you want an AI that can provide meaningful project management assistance, you need to have a bank of data from projects that you have previously worked on.

For agencies and other project-based businesses that have been established for a while, this sounds like a simple requirement to fulfill. You’ve worked on countless projects, and so you probably have plenty of data about how those projects were run - which projects were late, which were delivered under budget, and so on.

The trouble is, this may not be usable data. What format is it in? Is it current, or out of date? Is it all in one place, or scattered across multiple systems? Many issues can stand in the way. And rectifying these problems manually can be a mammoth task.
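To make these questions concrete, here is a minimal Python sketch of the kind of audit you might run over exported project records before using them for anything else. It flags missing fields, inconsistent date formats, and out-of-date entries. The field names (`project_id`, `budget`, `end_date`), the two-year staleness threshold, and the sample records are all hypothetical and not taken from any particular system.

```python
from datetime import date, datetime

REQUIRED_FIELDS = ("project_id", "budget", "end_date")  # hypothetical schema
DATE_FORMAT = "%Y-%m-%d"  # assumed canonical date format


def audit_record(record, today, max_age_days=730):
    """Return a list of data-quality issues found in one project record."""
    issues = []
    # Flag any required field that is absent or empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            issues.append(f"missing {field}")
    raw_date = record.get("end_date")
    if raw_date:
        try:
            end = datetime.strptime(raw_date, DATE_FORMAT).date()
        except ValueError:
            # The date exists but does not follow the assumed format.
            issues.append("end_date not in YYYY-MM-DD format")
        else:
            # Flag records that are older than the staleness threshold.
            if (today - end).days > max_age_days:
                issues.append("record older than threshold")
    return issues


# Illustrative sample data only.
records = [
    {"project_id": "P1", "budget": 12000, "end_date": "2024-03-01"},
    {"project_id": "P2", "budget": None, "end_date": "01/02/2023"},
    {"project_id": "P3", "budget": 8000, "end_date": "2019-06-30"},
]

today = date(2024, 6, 1)  # fixed date so the example is reproducible
report = {r["project_id"]: audit_record(r, today) for r in records}
for project_id, issues in report.items():
    print(project_id, issues or "ok")
```

Even a simple check like this gives you a rough sense of how much cleanup stands between your historical records and a usable training set.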

However, if you want to get the most out of AI, you cannot simply ignore your data problems. In the world of AI development, “garbage in, garbage out” is a truism. It’s crucial to understand that AI is not a conscious actor; it has no ability to approach data critically. And so it falls to humans to fact-check, course-correct, and use judgment. We decide what data goes into training an AI, and when we make mistakes in this process, we get problems like algorithmic bias - the result of training an AI on biased datasets.

There have been several high-profile cases of this, such as Amazon’s biased hiring AI (now scrapped) which discriminated against women applying for technical jobs, and the UK government’s use of an algorithm that downgraded the exam results of students from lower socio-economic backgrounds. In both instances, the bodies behind the AI had to take responsibility for the human consequences that resulted from their use of biased data.

Of course, using AI to assist in managing your projects is unlikely to be fraught with quite these kinds of ethical obligations and potential pitfalls. But these cases illustrate an important point: train AI on bad data, and expect a result that can cause more harm than good. In the world of project management, this might involve, for example, wildly inaccurate estimations and predictions. The result might be that your clients end up with unrealistic expectations about what can be produced by when, and for how much.

What Does Good Project Data Look Like?

Now that we’ve outlined the pitfalls of training AI on bad data, we have to be clear and ask: what does good data look like?

If you work in project management or delivery, you're probably an expert at collecting data on the performance of your projects. You know which projects are underperforming, which are over budget, and what's dragging them down. But a lot of the time, that's just the tip of the iceberg.

If you want to get a clear picture of how your projects run on a day-to-day basis, you need to do more than just look at the big picture. You need to dig deeper and get into the granular details. What type of work tends to overrun? What skills does this work involve? Are there any skills that are continually overutilized? Are any consistently underutilized? Perhaps the projects that go pear-shaped tend to experience problems at consistent points? Can you notice any patterns?

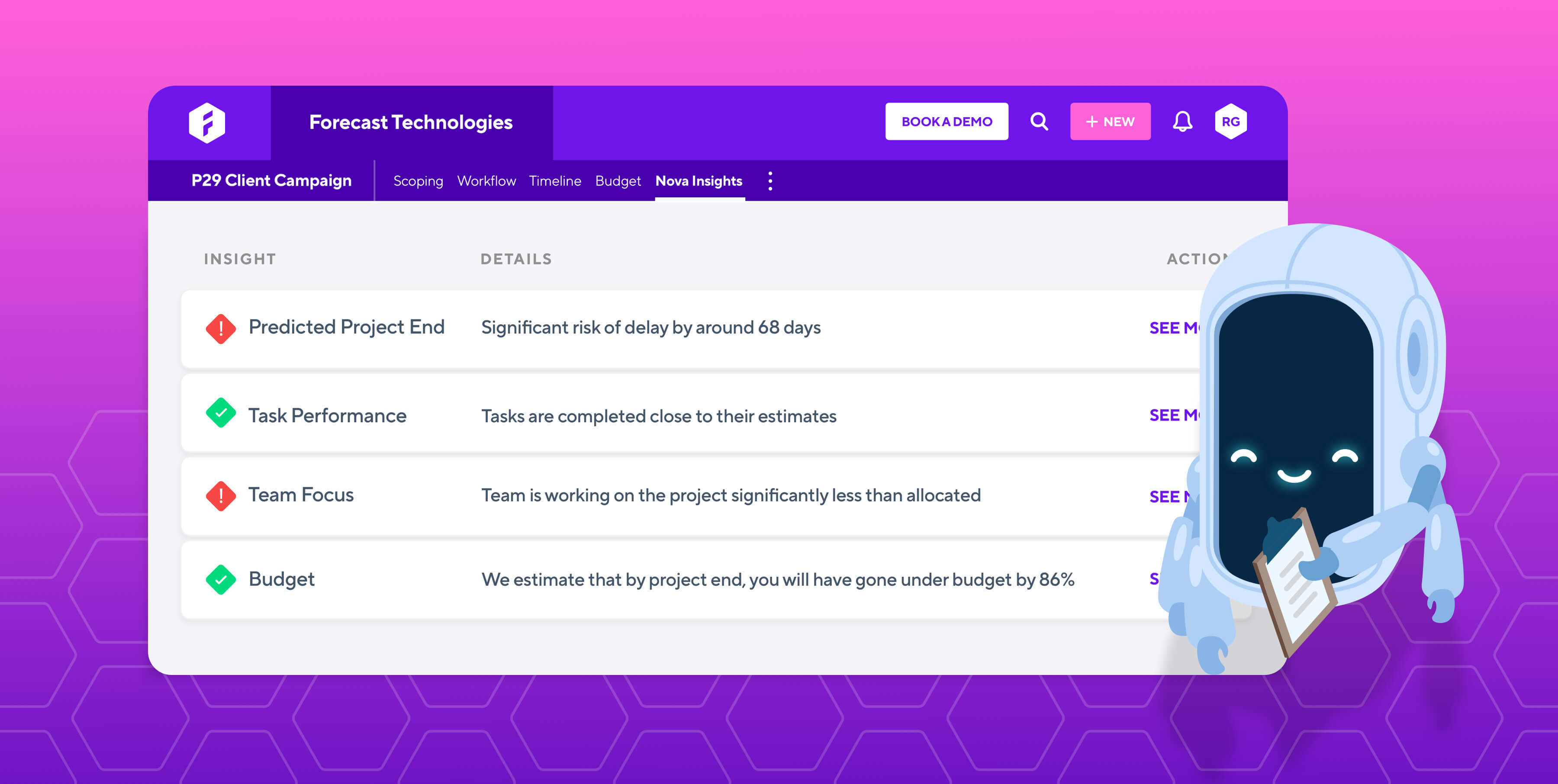
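Once task-level data exists, questions like these can be explored with very little code. The Python sketch below groups a hypothetical task log by work type and by skill, then compares actual hours against estimates. The tuple layout, the sample figures, and the `overrun_by` helper are assumptions made purely for illustration.

```python
from collections import defaultdict

# Hypothetical task log: (work type, skill, estimated hours, actual hours)
tasks = [
    ("design", "ux", 10, 14),
    ("design", "ux", 8, 10),
    ("development", "backend", 20, 19),
    ("development", "frontend", 16, 24),
    ("qa", "testing", 6, 6),
]


def overrun_by(tasks, key_index):
    """Return the ratio of actual to estimated hours for each group.

    key_index selects the grouping column: 0 for work type, 1 for skill.
    Assumes every group has a non-zero total estimate.
    """
    estimated = defaultdict(float)
    actual = defaultdict(float)
    for task in tasks:
        key = task[key_index]
        estimated[key] += task[2]
        actual[key] += task[3]
    return {key: actual[key] / estimated[key] for key in estimated}


by_type = overrun_by(tasks, 0)
by_skill = overrun_by(tasks, 1)

# List work types whose actual hours exceeded estimates, worst first.
for name, ratio in sorted(by_type.items(), key=lambda kv: kv[1], reverse=True):
    if ratio > 1:
        print(f"{name}: {ratio:.0%} of estimate")
```

A ratio above 1 means the group ran over its estimate; a ratio below 1 means it came in under. The same grouping idea extends to project phase or client, provided those fields are captured consistently.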

By surfacing this kind of data, you can build a true-to-life picture of your project operations from start to finish. And if an AI were trained on data of this quality and detail, it might also be able to get a good idea of how projects are run in your organization. Wouldn’t it be useful if the AI could spot when a project was starting to go awry before anyone else even noticed? Or if the AI gave you warnings in plenty of time if it looked like a critical task was going to overrun?

If this all sounds a little too advanced, here’s the thing: it’s closer to reality than you might expect. But the unavoidable point still stands that the AI’s project management predictions and assistance will only ever be dependable if it is trained on high-quality data. And with so many potential data points to capture in project management, the risk of error cannot be discounted. We have to take it seriously.

But people do not intuitively appreciate the value of good data. On average, 47% of newly created data records have at least one error that can be deemed critical (which is to say, a work-impacting error). Though this is frustrating, it is understandable on many levels. This might sound obvious, but people are just not very good at inputting data. When we are tired, we are prone to make mistakes. Clunky UI design can confuse or frustrate us. We get bored; our minds wander. Data entry is not something many people enjoy doing, and the results reflect that.

The fact is that software designed to gather data will nearly always do a better job than a person at inputting data. And this is nothing to be ashamed of. We are not computers; data inputting just doesn't play to our strengths as humans.

This means that the surest way to gather good project data is to manage your projects through a system that collects and processes data while you use it, reducing the amount of manual input as far as possible. And where manual input is needed (inputting time registrations against tasks, for instance), a simple, well-designed UI can support us in making consistent entries. Forecast draws on both of these principles - it is built with machine learning in mind, and so it is designed to produce data that supports this goal.

And with this quality project data, Forecast is doing big things. Suffice it to say that managing complex agency projects is about to get a lot easier.

A little helping hand from Forecast's AI...

Responsible Data = Responsive AI

It wouldn’t be inaccurate to say that AI is only as smart as we allow it to be. If we want AI to help us with as complex and varied a task as project management, we need to train it on data that captures all of this messy, changeable complexity in fine detail. It isn't impossible, but it does present a significant challenge.

The alternative is no use either: if we fail to provide AI with this level of data, and expect it to work just as well, we’ll have nobody to blame but ourselves when its predictions are wrong or its suggestions are ludicrous. Rather than being intimidated by this, we should embrace the possibilities. Ultimately, we are in control of the AI-driven future. We just need to take data seriously.

Forecast's AI-enabled project management platform could be the ideal on-ramp for introducing AI into your organization. Ensuring high-quality, usable data with Forecast is simple, as it is designed from the ground up with data hygiene and governance in mind. If you're curious, sign up for a free 14-day trial below, and start getting excited for the future of AI-augmented project management!
